Hawkeye King

CSE 576: Computer Vision

8/11/2006

Final project: Tracking Moving Vehicles

This project developed a system to track moving objects in a moving field of view, i.e., as a camera pans to follow the object. Two methods were explored, each built on a variant of the iterative Lucas-Kanade optical flow algorithm. The first computed the optical flow between successive frames at every pixel, then applied image segmentation and connected-components analysis to find distinct objects. This technique was unsatisfactory, so a second method was implemented. It followed roughly the same approach but worked on a sparse subset of features rather than on every pixel.

The software was implemented with the OpenCV computer vision library. The library wraps many standard methods in a convenient API, which allowed the problem to be approached at a high level. Important function calls are indicated in the system description below, along with a description of their implementation and behavior.

Overall design of the system

The system processes successive video frames (in JPEG format) to determine the motion of foreground “objects”. Objects are distinguished only by their motion against the “background”. They are not identified by other cues such as color segmentation. The background is assumed to be the “largest” image region, determined by counting the number of pixels or features in the region.

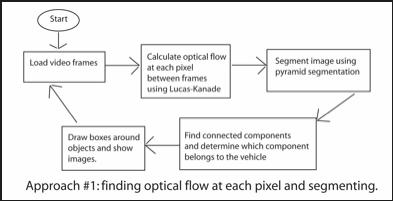

The first approach (see “Approach #1” at right) used the OpenCV implementation of the Lucas-Kanade optical flow algorithm to determine independent x and y motion components at each point of the grayscale image. The x and y components were merged into one image, with the red channel holding one component and the blue channel the other. Color segmentation using pyramids [1] (cvPyrSegmentation() in OpenCV) was then performed on this composite red-blue image. The aim was to segment out objects moving with distinct motion vectors. The results were poor because the motion vector image was too noisy, so further refinement was necessary and a second method was implemented.
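To make the red-blue composite concrete, the sketch below packs per-pixel flow components into an RGB image, with x in red and y in blue. The report does not say how displacements were scaled to 8-bit values. The linear mapping, the clamp at a hypothetical `max_disp` of 5 pixels, and the function name `flow_to_red_blue` are therefore assumptions made for illustration.

```python
def flow_to_red_blue(flow_x, flow_y, max_disp=5.0):
    """Pack per-pixel flow (lists of rows) into RGB tuples: R = x, G = 0, B = y.

    Assumption: each displacement is clamped to [-max_disp, max_disp] and mapped
    linearly onto 0..255, so zero motion becomes the mid-level value 128.
    """
    def to_byte(v):
        v = max(-max_disp, min(max_disp, v))  # clamp outliers
        return int(round((v + max_disp) / (2 * max_disp) * 255))

    return [[(to_byte(fx), 0, to_byte(fy)) for fx, fy in zip(row_x, row_y)]
            for row_x, row_y in zip(flow_x, flow_y)]
```

Under this mapping, a static background becomes a uniform purple of (128, 0, 128). Only pixels with distinct motion differ in color, which is what the pyramid segmentation was meant to pick out.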

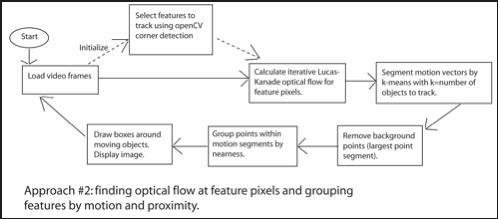

The second method first selected two hundred “corner” features with strong neighborhood gradients (cvGoodFeaturesToTrack() in OpenCV) and calculated motion vectors for just those features.

A K-means algorithm was implemented to segment the features’ motion vectors. The number of groups, K, was the number of objects to track, entered by the user at runtime. To seed the means, the range of motion-vector magnitudes was determined first. K seeds were then spaced evenly across that range. K-means was iterated until the change in the means from one iteration to the next fell below a threshold.
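The seeding and stopping rule described above can be sketched as follows. This is a minimal illustration, not the project's code. It assumes the clustering runs on the scalar magnitude |v| of each motion vector, the same quantity the seeds are spread over. The threshold `tol`, the iteration cap `max_iter`, and the name `kmeans_magnitudes` are hypothetical.

```python
import math


def kmeans_magnitudes(vectors, k, tol=1e-3, max_iter=100):
    """1-D k-means on the magnitudes of 2-D motion vectors.

    Seeds are spaced evenly from the smallest to the largest magnitude.
    Iteration stops once no mean moves by more than `tol`.
    Returns (labels, means).
    """
    mags = [math.hypot(dx, dy) for dx, dy in vectors]
    lo, hi = min(mags), max(mags)
    if k == 1:
        means = [(lo + hi) / 2]
    else:
        means = [lo + i * (hi - lo) / (k - 1) for i in range(k)]
    labels = [0] * len(mags)
    for _ in range(max_iter):
        # Assign each magnitude to the nearest current mean.
        labels = [min(range(k), key=lambda j: abs(m - means[j])) for m in mags]
        # Recompute each mean; an empty cluster keeps its previous mean.
        new_means = []
        for j in range(k):
            members = [m for m, lab in zip(mags, labels) if lab == j]
            new_means.append(sum(members) / len(members) if members else means[j])
        shift = max(abs(a - b) for a, b in zip(new_means, means))
        means = new_means
        if shift < tol:
            break
    return labels, means
```

Clustering on magnitude alone cannot separate two objects moving at the same speed in different directions. Clustering the 2-D vectors themselves, with the same seeding idea, would be the natural alternative.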

Once the feature motion vectors were segmented, the “background” was assumed to account for the largest portion of the features. Consequently, the largest group was identified and its points were discarded.
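Background removal then reduces to finding the most populous label. The following is a minimal sketch that assumes each feature already carries a cluster label. The function name is hypothetical. Ties between equally large groups are resolved in favor of whichever label occurs first, which is how `Counter.most_common` orders equal counts.

```python
from collections import Counter


def drop_background(points, labels):
    """Discard every point belonging to the most populous cluster.

    Follows the assumption that the background owns the largest share of
    tracked features. Returns the surviving (point, label) pairs.
    """
    background, _ = Counter(labels).most_common(1)[0]
    return [(p, lab) for p, lab in zip(points, labels) if lab != background]
```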

A good deal of noise was observed in the remaining points, and it had to be eliminated before the moving object(s) could be identified. A proximity-grouping algorithm was applied to each remaining group to find distinct clusters of features. Points near one another (distance < 10% of the total image size) were assumed to belong to the same moving object.
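Proximity grouping amounts to finding connected components of a “near each other” graph. In this sketch, two features are linked when they lie closer than 10% of the image diagonal. The report says only “10% of total image size”, so using the diagonal is an assumption. The name `proximity_groups` and the flood-fill (single-linkage) formulation are likewise assumptions.

```python
import math


def proximity_groups(points, width, height, frac=0.10):
    """Split (x, y) points into clusters of mutually reachable neighbours.

    Two points are linked if their distance is below frac * image diagonal.
    Clusters are the connected components of that graph, found by flood fill.
    """
    thresh = frac * math.hypot(width, height)
    unvisited = set(range(len(points)))
    groups = []
    while unvisited:
        seed = unvisited.pop()
        stack, members = [seed], [seed]
        while stack:
            i = stack.pop()
            # Collect still-unvisited points within the threshold of point i.
            near = [j for j in unvisited if math.dist(points[i], points[j]) < thresh]
            for j in near:
                unvisited.remove(j)
                stack.append(j)
                members.append(j)
        groups.append(sorted(points[m] for m in members))
    return groups
```

Each returned group is one candidate object. The order of the groups is not meaningful.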

Finally, a bounding box was calculated for each “object” from the extreme coordinates of its constituent points. The box was drawn and the image displayed.
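The bounding box is simply the minimum and maximum coordinates over an object's points. The following is a minimal sketch with a hypothetical helper name. With the OpenCV C API, the two opposite corners could then be passed to cvRectangle() for drawing.

```python
def bounding_box(points):
    """Axis-aligned box (x_min, y_min, x_max, y_max) spanning the (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)
```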

Interest operators used and how they performed

The interest points for which optical flow was calculated were those showing strong “cornerness”. To find features to track, OpenCV provides a useful function called cvGoodFeaturesToTrack(). The detector uses the Sobel operator to compute image derivatives at each point. It then forms the 2×2 matrix of summed gradient products over a small neighborhood. Points are kept where the smaller eigenvalue of that matrix is a local maximum and sufficiently large. These are good features to track, since corners tend to persist across frames of video.
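To illustrate the cornerness measure, here is a pure-Python sketch of the minimum-eigenvalue (Shi-Tomasi) score that cvGoodFeaturesToTrack() uses by default. Several parts are simplifications of this sketch rather than details of OpenCV's implementation: the 3×3 window, the helper names, and the omission of OpenCV's `min_distance` spacing between corners. The report's two hundred features would correspond to `max_corners=200`.

```python
import math

# 3x3 Sobel kernels for the horizontal (x) and vertical (y) derivatives.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def apply3x3(img, kernel, r, c):
    """Correlate a 3x3 kernel with the neighbourhood centred at row r, column c."""
    return sum(kernel[i][j] * img[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))


def min_eigen_map(img):
    """Smaller eigenvalue of the summed 2x2 gradient matrix over each 3x3 window."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            ix[r][c] = apply3x3(img, SOBEL_X, r, c)
            iy[r][c] = apply3x3(img, SOBEL_Y, r, c)
    score = [[0.0] * w for _ in range(h)]
    for r in range(2, h - 2):
        for c in range(2, w - 2):
            a = b = d = 0.0
            for rr in range(r - 1, r + 2):
                for cc in range(c - 1, c + 2):
                    a += ix[rr][cc] ** 2
                    b += ix[rr][cc] * iy[rr][cc]
                    d += iy[rr][cc] ** 2
            # Closed-form smaller eigenvalue of [[a, b], [b, d]].
            score[r][c] = (a + d) / 2 - math.sqrt(((a - d) / 2) ** 2 + b * b)
    return score


def good_features(img, max_corners, quality=0.01):
    """Return up to max_corners (x, y) corners, strongest first.

    A point qualifies if its score is a 3x3 local maximum and exceeds
    quality * (the best score in the image).
    """
    score = min_eigen_map(img)
    h, w = len(score), len(score[0])
    best = max(max(row) for row in score)
    picks = []
    for r in range(h):
        for c in range(w):
            s = score[r][c]
            if s <= quality * best:
                continue
            neighbours = [score[rr][cc]
                          for rr in range(max(0, r - 1), min(h, r + 2))
                          for cc in range(max(0, c - 1), min(w, c + 2))]
            if s >= max(neighbours):
                picks.append((s, c, r))
    picks.sort(reverse=True)
    return [(x, y) for _, x, y in picks[:max_corners]]
```

Along a straight edge, the gradients all point one way, so one eigenvalue is near zero and the edge scores low. Only where gradients in two directions meet, as at a corner, are both eigenvalues large.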

Methodology for tracking

Feature tracking was done in conjunction with optical flow calculation at each feature point. Optical flow at each feature point was calculated by the iterative Lucas-Kanade algorithm using pyramids [2]. The L-K algorithm determines motion vectors between two successive video frames by finding the displacement of each pixel from one image to the next. It relies on the fact that a feature in the second image will lie near its correspondent in the first image. Each level of a downsampling pyramid halves the image resolution and therefore halves the apparent displacement. A large motion thus becomes only a small pixel distance at the coarsest level, which simplifies finding correspondences. Features move only a short distance between frames and successive images are similar. As a result, feature matching and tracking are far easier than matching between arbitrary images.
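The core of Lucas-Kanade is a small least-squares problem for each window. The sketch below performs a single step at a single pyramid level for one window. It omits the iteration and the coarse-to-fine pyramid that OpenCV's cvCalcOpticalFlowPyrLK() adds, and the function name and window size are hypothetical. Images are plain lists of rows, so the example needs only the standard library.

```python
import math


def lucas_kanade_window(prev, curr, cx, cy, half=3):
    """Estimate the (u, v) displacement of the window centred at (cx, cy).

    Spatial gradients come from central differences on `prev`, and the
    temporal gradient is curr - prev. The 2x2 normal equations
        [sum Ix^2  sum IxIy] [u]     [sum IxIt]
        [sum IxIy  sum Iy^2] [v] = - [sum IyIt]
    are solved in closed form. Images are indexed [y][x].
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0
            it = curr[y][x] - prev[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("gradient matrix is singular; window is not trackable")
    # Cramer's rule for the 2x2 system.
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v
```

The single-step linearization is only valid for displacements of about a pixel or less. In the pyramidal version, the estimate from a coarse level is doubled and used as the starting offset at the next finer level. With L levels above the original image, a displacement of d pixels appears as only d/2^L pixels at the top.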

Experiments and results

The first approach did not identify the moving object robustly. The motion vector data tended to be very noisy. Optical flow is computed less accurately in homogeneous color regions, where there is little image gradient to constrain the motion. It was sometimes possible (~10% of frames) to see a distinctive region corresponding to an obvious moving vehicle. More often, though, the segmented image looked like random noise. This approach was quickly judged hopeless and abandoned in search of a better solution.

Left: still image of moving truck. Right: motion-segmented image (best results) using approach #1.

The second approach was far more successful at tracking moving objects against a moving background. Background removal usually eliminated most of the background points, leaving the object points in the foreground for identification. There were some problems with edge effects. Features often “piled up” along the image edges, and k-means clustered them together with the moving-object segments. This was the primary reason proximity clustering was necessary: to distinguish object features from edge effects.

Left: still image of moving truck. Right: motion-segmented image (average results) using approach #2.

One problem with this system was identifying multiple moving objects. The k-means segmentation of motion features favored larger groups of motion vectors. As a result, smaller moving objects were ignored or merged with larger ones.

Right: Approach #2 applied to another image; smaller objects are sometimes lost.

Conclusions

Two methods for object tracking were explored in this project. The first used dense optical flow alone to perform motion segmentation on the entire image. The second combined the similarity of successive images with optical flow to perform feature tracking. The latter approach was highly effective at tracking features and identifying objects.

This combination of feature tracking and optical flow could form the basis of a powerful object recognition and tracking package.

References

[1] V. Lozano and B. Laget, “Fractional pyramids for color image segmentation,” Proceedings of the IEEE Southwest Symposium on Image Analysis and Interpretation, San Antonio, TX, 8-9 April 1996, pp. 13-17.

[2] J.-Y. Bouguet, “Pyramidal Implementation of the Lucas Kanade Feature Tracker: Description of the Algorithm,” Intel Corporation.